The Best of Both Worlds: Native Object Access Meets Parallel File System Performance

Topic : information technology | software platforms

Published on Oct 8, 2025

Over the last few decades, file storage and object storage have evolved alongside each other, and they were largely deployed for different use cases. A local application requiring high throughput may use a parallel file system, and one running in the cloud would typically use object storage. Data to be used by humans is typically best presented as files, and data for algorithmic consumption is often better presented as objects. But especially with the rise of cloud computing and AI, things aren’t so black and white anymore. Storing files and objects in separate silos creates barriers to the full use of an organization’s data.

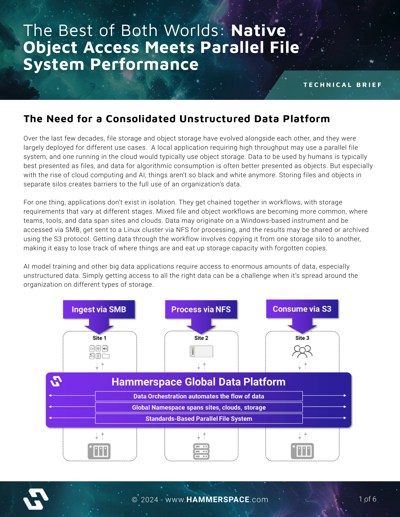

For one thing, applications don’t exist in isolation. They get chained together in workflows, with storage requirements that vary at different stages. Mixed file and object workflows are becoming more common, where teams, tools, and data span sites and clouds. Data may originate on a Windows-based instrument and be accessed via SMB, get sent to a Linux cluster via NFS for processing, and the results may be shared or archived using the S3 protocol. Getting data through the workflow involves copying it from one storage silo to another, making it easy to lose track of where things are and eat up storage capacity with forgotten copies.

AI model training and other big data applications require access to enormous amounts of data, especially unstructured data. Simply getting access to all the right data can be a challenge when it’s spread around the organization on different types of storage.

Want to learn more?

Submit the form below to Access the Resource